INTRODUCTION

Live scoring and artificial intelligence (AI)-based music generation are two fields in which a large volume of research has already taken place, providing numerous approaches to the realisation of such projects. AI-generated music is rooted in Computer-Aided Composition (CAC), which itself comprises a large body of work dating back to the 1950s. More recently, textual AI-based content generation has also seen much development, with generic tools like OpenAI’s ChatGPT and Google’s Bard drawing a lot of attention. This article presents a new approach to AI-aided composition through textual score writing combined with a character-based Gated Recurrent Unit (GRU) Recurrent Neural Network (RNN). An RNN is a type of neural network that can be trained on sequences of data and then generate similar sequences; text generators are one such example. Two widely used RNN variants are the Long Short-Term Memory (LSTM) and the GRU. They are two different approaches to solving a problem of traditional RNNs known as vanishing or exploding gradients: during training, the values used to update the coefficients of the network become either too small to have any effect or too large to be handled by a computer.

The approach analysed in this article is based on LiveLily, a bespoke software for live scoring through live coding, and a GRU RNN model created with the TensorFlow library. Live coding is a practice where a computer musician or other artist writes computer code live during a performance. LiveLily uses a subset of the Lilypond language. Lilypond is a textual language for score engraving (1). It is simple yet versatile and powerful, enabling users to write complex Western notation music scores in an expressive way. The following lines create two bars in a 4/4 time signature with the C major scale in quarter notes starting from middle C, going up one octave, to be played fortissimo:

\time 4/4

c'4\ff d' e' f' \bar "|" g' a' b' c'' \bar "|."

For musicians familiar with the Western notation format, using this language can be very expressive, as it uses symbols found in sheet music and terms used in music schools in the Western world. LiveLily is inspired by this language and uses a slightly modified subset of it to create sequencers and animated scores. It is written with the openFrameworks toolkit and adds a bit of syntactic sugar (a term used in computer science for syntax that usually enables more concise coding) to enable fast typing for live coding sessions. It can be used either with acoustic instruments as a live scoring system or with electronic music systems that support the MIDI or the Open Sound Control (OSC) communication protocol (2). LiveLily is an open-source project, hosted on GitHub.

The idea behind this project is to train a GRU RNN with music phrases in the LiveLily language, which is a programming language, and then interact with it during a live coding session. Once the RNN is trained, it can provide suggestions during performance based on what the live coder seeds it with. The live coder can then decide whether to use these phrases intact, edit them, or discard them. Since program synthesis is an AI practice with research dating back to the 1940s and researchers are still active in this field (3), this project has many resources to draw and learn from.

RELATED WORKS

Computer-generated music precedes AI-based music generation. The former is a broad field of practice with many branches. From soundtracks generated through image analysis while looking out of a train window (4), to audio mashups (5), or even auto-foley tracks (6), one can find many examples where the computer is solely responsible for the generation of music, once the algorithm has been defined. Besides enabling computer-generated music, LiveLily is a system for live scoring, a field with many research and artistic projects. In this section I will outline related works in the relevant fields: AI-generated music, computer-generated music, computer-aided composition (CAC), live scoring works, and live coding combined with AI.

COMPUTER-AIDED COMPOSITION AND SOFTWARE

Computer-generated music has its roots in CAC. The first such work, the Illiac Suite by L. A. Hiller and L. M. Isaacson, dates back to 1956 (7). Another early example is ST/10-1, 080262 by Iannis Xenakis, a composition for acoustic ensemble realised with calculations made on the IBM-7090 computer (8). According to Curtis Roads, numerous other composers around the time of Hiller or later used computers to generate music (9). Herbert Brün and John Myhill, James Tenney, Pierre Barbaud, Michel Philippot, and G. M. Koenig are among these composers. CAC software should also be mentioned in this section. Such software is nothing new: the UPIC system, where the user could draw various sound parameters, was developed and used in the 1970s (10). Other software has been developed more recently, including OpenMusic (OM) (11). More software can be considered CAC software, but it leans more toward live scoring, so it is mentioned in the respective section.

GENERATIVE SOUNDSCAPES

There are many soundscapes and sound-walks that are also computer-aided or computer-generated. Hazzard et al. created a sound-walk for the Yorkshire Sculpture Park based on GPS data (12). Lin et al. created Music Paste, a system that chooses the music clips it considers appropriate from a collection of audio and concatenates them seamlessly by creating transition segments to compensate for changes in tempo or other parameters (13). Manzelli et al. create music by combining symbolic with raw audio to capture mood and emotions (14). Symbolic here refers to notes, while raw audio refers to waveforms.

LIVE SCORING WORKS AND SOFTWARE

CAC tends to blend with live scoring works, where usually the composition that is written live is aided by a computer. Comprovisador is a live scoring and CAC work for ensemble and soloist (15). The computer in this work “listens” to the soloist, decodes their playing, and creates a score for the ensemble based on this analysis. A work that is closer to LiveLily is No Clergy by Kevin C. Baird, a live scoring composition using two programming languages, Python and Lilypond (16). This work creates the composition by using audience input on the duration and dynamics of notes, among other data. John Eacott sonifies tidal flow data in his work Flood Tide (17). This composition for acoustic ensemble aims at long performances of slow-changing music, as the tidal flow data are updated every five seconds through a sensor placed in the water.

Live scoring software includes the bach external library for Max (18), Maxscore (another Max external object) (19), and INScore (20). OM is also live scoring software, even though it was initially developed as CAC software, with its live scoring features added later on (21).

LIVE CODING AND AI

The combination of live coding and AI is not entirely new, although the attempts to combine the two are not numerous. If one refers to the Live Coding book (22) as an exhaustive source on this topic, one can distinguish two notable mentions. These are Cibo v2 (23) and an augmented live coding system by Hickinbotham and Stepney (24). Both projects are based on the Tidal language for live coding (25).

Cibo v2 uses a combination of an autoencoder, a variational autoencoder, and an RNN, to produce Tidal code. The (variational) autoencoder is a type of Neural Network that compresses its training data into what is called a latent space, from which one can produce output by choosing a point in this space. The augmented live coding project uses the Extramuros platform for networked live coding sessions (26) combined with an additional server that both checks whether the Tidal patterns submitted by the participants of a performance conform to the Tidal syntax, and generates its own Tidal snippets, based on mutations of the submitted patterns.

LSTM RNN-BASED MUSIC GENERATION

Music generation with the use of LSTM RNNs has seen a lot of development in recent years. Most of the research done in this field combines RNNs or other types of Neural Networks with MIDI symbolic notation. Agrawal et al. use an LSTM RNN to generate music by using MIDI files to train the network on the relationships between chords and notes (27). This project uses Keras, a user-friendly Python module that builds on top of Google’s TensorFlow ecosystem for machine learning (ML), and combines it with the music21 Python module (28) to get note and chord objects from the MIDI files and feed them to the RNN. Chen et al. use an LSTM RNN to generate Guzheng (Chinese Zither) music, again using MIDI data (29). Mots’oehli et al. compare adversarial with non-adversarial training of LSTM RNNs to create music based on a corpus of MIDI data (30). Adversarial refers to a type of AI where two Neural Networks compete against each other, and where one network’s gain is the other network’s loss. Non-adversarial refers to more traditional LSTM RNNs. Their findings suggest that adversarial training produces more aesthetically pleasing music.

Yu et al. create music from lyrics using an LSTM-Generative Adversarial Network (GAN) RNN (31). They extract alignment relationships between lyrics’ syllables and music attributes to create their training corpus from 12,197 MIDI songs. Ycart et al. create binary piano rolls from MIDI files to train an LSTM RNN and then use it to make polyphonic music sequence predictions (32). Li et al. use an LSTM RNN with MIDI data of piano music to generate such music (33). Huang et al. combine a Convolutional Neural Network with an LSTM, using matrices produced from MIDI data, to generate music (34). Min et al. use a GAN to generate music, to address the problem of LSTMs not being able to produce long music sequences (35). Arya et al. also use music21 combined with an LSTM RNN trained on MIDI files, to generate music based on part of an existing composition (36).

What we can see in this section is that there is much more research that uses MIDI data to train the network and generate new content than research focusing on text generators. This article suggests a new approach, where AI is trained on text instead of MIDI and is used to generate text that results in a music score. Even though a solid foundation for this system has already been laid, as analysed in the next sections, there is still a lot to learn and many resources to draw from. With the advent of Large Language Models (LLMs) like OpenAI’s ChatGPT and Google’s Bard, it is interesting to see how the research that has been conducted and published on text generation through AI can enhance a symbolic music-generating project, where symbolic music refers to notes in the Western music notation system.

THE LIVELILY SYSTEM

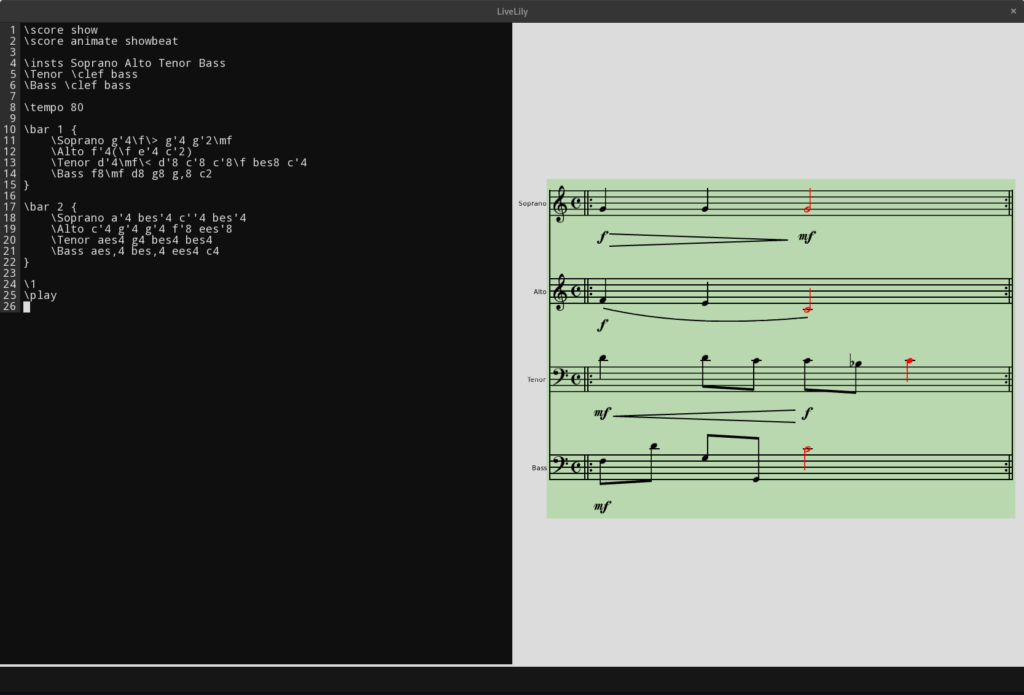

LiveLily is an open-source live sequencing and live scoring software based on a subset of the Lilypond notation. The LiveLily language is a slightly modified version of Lilypond with a bit of syntactic sugar to enable fast typing for live coding sessions. It does not produce any sound but sends OSC or MIDI messages to other software or hardware. The idea was to build a system that enables the user to create a music score similar to a Csound score (37) in the context of controlling a synthesiser. The Lilypond language was chosen because of its expressiveness and the similarity it shares with sheet music jargon and Western music terminology. During the development of LiveLily, I started developing the live scoring part of the software. This part led to the idea of using LiveLily to conduct acoustic ensembles through live coding in live scoring sessions. Even though I have used the Lilypond compiler for live scoring in previous work (38), I opted to develop the live scoring part in openFrameworks, which was already used for the text editor of LiveLily. Using openFrameworks enabled both the integration of the score in the editor and its instant display, whereas the Lilypond compiler takes some time to render even a single bar of music. It also enabled the score to be animated by colour-highlighting active notes and showing the beat with a pulsating rectangle that encapsulates the full score. Figure 1 illustrates a LiveLily session.

Figure 1: A LiveLily session. Alexandros Drymonitis, 2023.

By using the Lilypond language, LiveLily enables numerous expressive gestures to be included in the sequencer and score. As an example, the code chunk below creates two bars of music for four instruments, and then a loop where the first bar is repeated twice, and then the second bar is played once. This code results in the image above, where the first bar is displayed.

\bar 1 {

\sax c''4\f\>( d''8\mf) fis'' e''4 ees''8 d''

\cello c,4\ff( cis, c,) d,

\bass e,,4\f ees,, e,, f,,

\perc [f'8 c''16*2 a' c'' c''8]*2

}

\bar 2 {

\sax b'8 bes' c''4-. cis''16*4 d''4--

\cello c,4 cis, ees d,

\bass g,,4 fis,, e,, f,,

\perc [<a' c''>16 c''*3 f'8 <a' c''>]*2

}

\loop loop1 {1*2 2}

The two bars created with the \bar command include dynamics, diminuendi, and articulation symbols. It is also possible to include arbitrary text in the score, like “flutter” or any other text. All this information is communicated with OSC messages, and it is up to the user how these will be interpreted in the audio software. Most of this information can be communicated with MIDI too, excluding the arbitrary text. In the case of live scoring sessions with acoustic instruments, these semantics result in standard Western music notation symbols that are well-known to Western instrument performers. The syntactic sugar that LiveLily adds concerns the multiplication factors shown in the example code and figure above. Multiplying a note, or a group of notes encapsulated in square brackets, repeats this figure as many times as the value of the multiplier. This is shown in the \perc line of the second bar in the code chunk above. When a bar name is multiplied, as in the \loop command in the code chunk above, the entire bar is repeated in the loop.

THE GRU RNN

The GRU RNN of this project is created with the TensorFlow library, and it is inspired by a character-level text generator tutorial provided by its developers. Character-level means that the RNN generates its content one character at a time, predicting which character should follow based on the characters that precede it, instead of choosing entire words. Since LiveLily does not use MIDI information to create its scores, but its own language with a limited set of standard characters, a character-level text generator seemed like an appropriate approach. The code for creating and running this network is hosted on GitHub. This code must be combined with the LiveLily system.
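To make the character-level idea concrete, the following minimal sketch shows how a piece of LiveLily text could be turned into training examples for next-character prediction. It is not taken from the project's code: the function names, the toy corpus, and the window length are illustrative assumptions.

```python
# Toy corpus: one LiveLily line from the example earlier in this article.
corpus = "\\cello c,4 cis, ees d,\n"

# Vocabulary: every distinct character in the corpus, in a stable order.
vocab = sorted(set(corpus))
char_to_id = {ch: i for i, ch in enumerate(vocab)}
id_to_char = {i: ch for ch, i in char_to_id.items()}


def encode(text):
    """Map each character to its integer id."""
    return [char_to_id[ch] for ch in text]


def decode(ids):
    """Map integer ids back to text."""
    return "".join(id_to_char[i] for i in ids)


def training_pairs(ids, seq_length):
    """Yield (input, target) windows; the target is the input shifted by one."""
    for start in range(len(ids) - seq_length):
        chunk = ids[start:start + seq_length + 1]
        yield chunk[:-1], chunk[1:]


ids = encode(corpus)
pairs = list(training_pairs(ids, seq_length=8))
first_input, first_target = pairs[0]
print(repr(decode(first_input)), "->", repr(decode(first_target)))
```

Each target window is the input window moved one character to the right, so at every position the network is asked for the character that comes next.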

PREPARING THE CORPUS

Preparing a corpus to train the RNN is a challenging task. If one is to use one’s own music only, then one has to write a lot of music before a reasonable corpus can be assembled, as a sufficient training corpus should have at least 100,000 characters, as recommended by the developer of the Keras library. In the LiveLily examples provided in this article, a single bar of music for a single instrument amounts to approximately thirty characters. For a four-instrument ensemble, one would need to write around 800 bars of music to reach 100,000 characters. It is possible, though, to use music by other composers to train the network, something that is likely to have interesting results, since it will create a hybrid-style composition generator.
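The estimate of around 800 bars follows from simple arithmetic, sketched below with the approximate figures quoted above. The constant names are illustrative, and the per-line character count is only the rough average given in the text.

```python
import math

MIN_CORPUS_CHARS = 100_000  # minimum corpus size quoted in the text
CHARS_PER_LINE = 30         # approximate size of one bar for one instrument
INSTRUMENTS = 4             # size of the ensemble in the article's example

chars_per_bar = CHARS_PER_LINE * INSTRUMENTS
bars_needed = math.ceil(MIN_CORPUS_CHARS / chars_per_bar)
print(chars_per_bar, bars_needed)  # 120 834
```

Lines with dynamics, articulation or multipliers are longer than thirty characters, so the real number of bars required can be somewhat lower.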

Since the RNN will be generating text in the LiveLily language, the training corpus must be written in this language. For the purposes of this project, a Python script has been developed to translate MusicXML into LiveLily files. MusicXML was chosen because it is a format used by many score engraving systems, including Sibelius, Finale, MuseScore, and Lilypond. It is also possible to produce MusicXML files from scores in the corpus of the music21 Python module.
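As an illustration of what such a translation involves, the sketch below converts the notes of one MusicXML measure into Lilypond-style tokens using only the Python standard library. It is not the project's script: the function names and the sample measure are hypothetical, only five note types are mapped, and ties, dots, chords and dynamics are ignored.

```python
import xml.etree.ElementTree as ET

# MusicXML <type> values mapped to Lilypond duration numbers.
DURATIONS = {"whole": "1", "half": "2", "quarter": "4", "eighth": "8", "16th": "16"}


def pitch_to_lily(step, alter, octave):
    """Translate a MusicXML pitch into a Lilypond note name (Dutch naming)."""
    name = step.lower()
    name += "is" * alter if alter > 0 else "es" * -alter
    # Lilypond's unmarked octave is the one below middle C (MusicXML octave 3).
    shift = octave - 3
    return name + ("'" * shift if shift >= 0 else "," * -shift)


def measure_to_tokens(measure):
    """Return the notes and rests of one <measure> as a space-separated string."""
    tokens = []
    for note in measure.iter("note"):
        dur = DURATIONS[note.findtext("type")]
        if note.find("rest") is not None:
            tokens.append("r" + dur)
            continue
        pitch = note.find("pitch")
        step = pitch.findtext("step")
        alter = int(pitch.findtext("alter", default="0"))
        octave = int(pitch.findtext("octave"))
        tokens.append(pitch_to_lily(step, alter, octave) + dur)
    return " ".join(tokens)


# Hypothetical one-bar fragment: middle C, F sharp 5, E flat 2 and a rest.
SAMPLE = """
<measure number="1">
  <note><pitch><step>C</step><octave>4</octave></pitch><duration>1</duration><type>quarter</type></note>
  <note><pitch><step>F</step><alter>1</alter><octave>5</octave></pitch><duration>1</duration><type>quarter</type></note>
  <note><pitch><step>E</step><alter>-1</alter><octave>2</octave></pitch><duration>1</duration><type>quarter</type></note>
  <note><rest/><duration>1</duration><type>quarter</type></note>
</measure>
"""
print(measure_to_tokens(ET.fromstring(SAMPLE.strip())))  # c'4 fis''4 ees,4 r4
```

A full converter would also have to write the instrument name and the \bar wrapper, and could drop repeated durations, since in Lilypond a note without a duration takes that of the preceding note.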

The training corpus should consist of bar definitions only, in the LiveLily language. No other information is required, since the network will be asked to generate only bars, and more information from LiveLily sessions would confuse it. Additionally, the corpus should consist of music written for the same ensemble, so that the predictions of the network are aligned with the ensemble for which this system is used.

GENERATING MELODIC LINES

Once the RNN has been trained, the user can start a live coding session with the assistance of the RNN. Before starting to type, a Python script must be launched that will keep track of all keystrokes, even if this script is run in the background. The pynput Python module can do this.

LiveLily, partly being a text editor, creates a new line when the Return key is hit. The Python script with the pynput module keeps track of all keystrokes, and when the Return key is pressed, the script clears the saved contents and starts from scratch. In LiveLily, hitting Ctl+Return executes whatever is written in the line where the cursor is (or a chunk of code, if the cursor is within curly brackets) without proceeding to a new line, while hitting Shift+Return both executes the command and creates a new line. In the case of Ctl+Return, the Python script should do nothing. In the case of Shift+Return, the script tests the content saved from what has been typed. Since LiveLily supports comments, the following line can be used to let the script know that the user is asking for an AI-generated bar.

%generate \bar

A comment is used so that no error is raised by LiveLily when this line is executed, since the software does not include any such command. When this line is executed with Shift+Return, the Python script seeds the RNN with \bar. As it is possible that the RNN produces content that is not entirely correct in a LiveLily context, a sanity check of the AI-generated content is necessary. In this step, the script checks for invalid instrument names and for rhythmic consistency, ensuring that no line sums up to a greater or lesser duration than expected.
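The keystroke bookkeeping described above can be sketched as a small class, independent of any keyboard library. This is an assumption-laden illustration rather than the project's script: the class and method names are hypothetical, and it assumes that Shift+Return clears the saved line in the same way as a plain Return, since both create a new line. In a real script these methods would be called from a keyboard listener such as the one pynput provides; that wiring is omitted here.

```python
GENERATE_PREFIX = "%generate "


class KeystrokeBuffer:
    """Mirrors what has been typed on the current editor line."""

    def __init__(self):
        self.chars = []

    def on_char(self, ch):
        """Store one printable character."""
        self.chars.append(ch)

    def on_return(self, shift=False, ctrl=False):
        """Handle a Return press; return a seed for the RNN, or None."""
        if ctrl:
            # Ctl+Return executes without leaving the line: do nothing.
            return None
        line = "".join(self.chars)
        # Return and Shift+Return both move to a new line, so start afresh
        # (clearing on Shift+Return is an assumption of this sketch).
        self.chars.clear()
        if shift and line.startswith(GENERATE_PREFIX):
            return line[len(GENERATE_PREFIX):].strip()
        return None


buffer = KeystrokeBuffer()
for ch in "%generate \\bar":
    buffer.on_char(ch)
print(buffer.on_return(shift=True))  # \bar
```

Keeping this logic separate from the listener makes it possible to test it without a keyboard or a display.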

To receive the melodic lines generated by the RNN without changing the source code of LiveLily, the Python script must type the generated content into the LiveLily editor. Even though the pynput module can be used as a keyboard too, besides a keyboard listener, it appears that typing with pynput’s keyboard controller does not work as expected, either because of feedback that occurs when the module’s keyboard controller and listener are simultaneously active, or because LiveLily does not behave as expected when controlled with this module. What works well is that the Python script “types” the generated content by sending its characters over OSC. LiveLily supports passive control with OSC, so other software can type in it. Once the Python script has typed the AI-generated content, it stops and does not execute it within LiveLily. This means that it is up to the discretion of the user whether they will use the AI-generated content intact, modify it, or discard it altogether. This setup protects from faulty content that might be produced, even though there is a sanity check before it is “typed”.
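For readers unfamiliar with OSC, the sketch below shows how a string can be packed into an OSC 1.0 message and sent over UDP one character at a time, using only the Python standard library. The address /livelily/type, the port number and the one-character-per-message layout are placeholders chosen for illustration; they are not LiveLily's documented interface, which should be looked up in its own documentation.

```python
import socket


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, text):
    """Build an OSC message carrying a single string argument."""
    return osc_string(address) + osc_string(",s") + osc_string(text)


def type_over_osc(content, host="127.0.0.1", port=9000,
                  address="/livelily/type"):
    """Send each character as its own message (placeholder address and port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        for ch in content:
            sock.sendto(osc_message(address, ch), (host, port))


print(osc_message("/a", "c"))  # b'/a\x00\x00,s\x00\x00c\x00\x00\x00'
```

In practice an OSC library would normally handle the encoding; the manual version is shown only to make the message layout visible.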

PROOF OF CONCEPT

The video below demonstrates the system in use. The RNN of this video was trained with a corpus of Bach’s four-voice chorales, retrieved from the corpus of music21. The scores were converted to the MusicXML format, and then the necessary information, like meter, instrument name, pitch, and duration, was extracted and converted to the LiveLily format. Once each piece was stored as a LiveLily file, a Python script assembled all these files into one big corpus, consisting of 261,116 characters. The RNN was trained for 30 epochs; its training lasted one hour and fifty-one minutes, and it ended up with a loss of 0.2949. The training was done on a machine without GPU support, which demonstrates that it is feasible to use this system without much infrastructure, as a personal computer should be sufficient. The sound in the video is produced by oscillators in Pure Data, receiving the sequencer data via OSC.

Video 1: The GRU RNN generating melodic lines in the LiveLily format, trained with a corpus of Bach’s chorales. Alexandros Drymonitis, 2023.

It is evident that the resulting music does not imitate Bach very convincingly, but it is also evident that the RNN keeps the register of each voice, without generating melodies that are too high for the bass or too low for the soprano, and this applies to all voices. At some point, the network makes a mistake and generates a half-finished bar, which is discarded, as it is up to the user which bars are used in a session. Sometimes it takes longer for the network to generate a bar, and that is probably because of the test each bar undergoes, where if a generated bar is not of the necessary duration, the network is asked to generate another bar, and this procedure is repeated until a bar that is correct has been produced. During the development of this system though, most of the time, the first bar that was generated was correct. This, together with the integrity of the registers, shows that the network has learnt several aspects of the music quite well.
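The check-and-regenerate procedure described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's implementation: the parser only understands the syntax used in the examples of this article (sticky durations, chords in angle brackets, and the note and bracket multipliers), the function names are hypothetical, a stub stands in for the RNN, and the cap on the number of attempts is an addition of this sketch.

```python
import re
from fractions import Fraction

# Pitch (or rest), octave marks, optional duration, dots and *n multiplier.
NOTE = re.compile(r"([a-g](?:is|es)*|r)[',]*(\d+)?(\.*)(?:\*(\d+))?")


def line_duration(line):
    """Duration of one instrument line as a fraction of a whole note."""
    parts = line.split(None, 1)                   # instrument name, then notes
    body = parts[1] if len(parts) > 1 else ""
    body = re.sub(r"\\[a-zA-Z<>!]+", " ", body)   # drop dynamics and hairpins
    body = re.sub(r"<[^<>]*>", "c", body)         # a chord counts as one note
    body = re.sub(r"\[([^\]]*)\]\*(\d+)",         # expand [ ... ]*n groups
                  lambda m: " ".join([m.group(1)] * int(m.group(2))), body)
    total, current = Fraction(0), Fraction(1, 4)  # default: a quarter note
    for _pitch, dur, dots, mult in NOTE.findall(body):
        if dur:                                   # else reuse the last duration
            current = Fraction(1, int(dur)) * (2 - Fraction(1, 2 ** len(dots)))
        total += current * int(mult or 1)         # note*n repeats the note
    return total


def bar_is_valid(lines, instruments, expected=Fraction(1)):
    """True if every line names a known instrument and fills the bar exactly."""
    for line in lines:
        words = line.split()
        if not words or words[0].lstrip("\\") not in instruments:
            return False
        if line_duration(line) != expected:
            return False
    return bool(lines)


def generate_valid_bar(generate, instruments, max_attempts=10):
    """Call generate() until a bar passes the check; None if none does."""
    for attempt in range(1, max_attempts + 1):
        lines = generate()
        if bar_is_valid(lines, instruments):
            return lines, attempt
    return None, max_attempts


# Stand-in for the RNN: the first candidate is a quarter note short.
candidates = iter([
    [r"\cello c,4 cis, ees"],
    [r"\cello c,4\ff( cis, c,) d,"],
])
bar, attempts = generate_valid_bar(lambda: next(candidates), {"cello"})
print(attempts)  # 2
```

Using exact fractions avoids rounding errors when many short durations are summed, and the default expected duration of one whole note corresponds to a 4/4 bar.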

CONCLUSION

Generating textual content with the aid of AI is a practice that, at the time of writing, is seeing a lot of development. Generic LLMs like OpenAI’s ChatGPT and Google’s Bard have drawn a lot of attention in various academic fields and have initiated many research projects. On the other hand, generating music with RNNs is a field with active research where the problem is approached from many angles. Most such research is based on MIDI data to train the network and generate new musical content, while research on AI-generated music through text generation also exists, although this is limited and focused on electronic music, rather than sheet music.

LiveLily is a software for live sequencing and live scoring through live coding with a subset of the Lilypond language. Being able to create music scores live through textual coding can take advantage of the tools that are being created for textual content generation, in a more domain-specific context. Using an RNN with LiveLily enables a different approach to AI-generated music, that of using a character-level GRU RNN to generate text rather than MIDI data. By generating music scores with a Lilypond-based language, many levels of expressiveness can be included, like dynamics, crescendi/diminuendi, articulation symbols, or even arbitrary text that can be interpreted at will by the performer. Combining LiveLily with a GRU RNN can lead to live coding sessions where the live coder uses the network as an instant composition assistant whose melodic line suggestions they are free to use, edit or discard.

From a compositional perspective, this project provides the composer with a tool for creation or experimentation, where AI can be used as a companion. One such case is an RNN trained on a hybrid corpus of music, providing musical patterns that blend compositional techniques. A composer can then draw inspiration for their own creations. From a performative perspective, this AI-enhanced version of LiveLily can enable fast creation of patterns in a live coding session, so the live coder can be partially relieved of the strain of generating new content under the strict time constraints of a live session. As research on AI-generated content in various fields is still very active, there is still a lot to learn and a lot of room for refinement of the system presented in this article. Nevertheless, a solid foundation for experimentation has already been laid, one that already works as a functional system.

REFERENCES

1. Han Wen Nienhuys and Jan Nieuwenhuizen, “Lilypond, a System for Automated Music Engraving”, Musical Informatics Colloquium Proceedings (2003).

2. Matthew Wright and Adrian Freed, “Open SoundControl: A New Protocol for Communicating with Sound Synthesizers”, ICMC – International Computer Music Conference Proceedings, pp. 101-104 (1997).

3. J Austin, A Odena, M Nye, M Bosma, H Michalewski, D Dohan, E Jiang, C Cai, M Terry, Q Le, and C Sutton, “Program Synthesis with Large Language Models”, arXiv:2108.07732 [cs.PL] (2021).

4. P Knees, T Pohle and G Widmer, “Sound/Tracks: Real-Time Synaesthetic Sonification of Train Journeys”, MM – Multimedia Conference Proceedings, pp. 1117–1118 (2008).

5. Marinos Koutsomichalis and Bjorn Gambäck, “Algorithmic Audio Mashups and Synthetic Soundscapes Employing Evolvable Media Repositories”, ICCC – International Conference on Computational Creativity Proceedings (2018).

6. Sanchita Ghose and John J Prevost, “AutoFoley: Artificial Synthesis of Synchronized Sound Tracks for Silent Videos with Deep Learning”, Transactions on Multimedia 23, pp. 1895-1907 (IEEE, 2021).

7. Tiffany Funk, “A Musical Suite Composed by an Electronic Brain: Reexamining the Illiac Suite and the Legacy of Lejaren A. Hiller Jr.”, Leonardo Music Journal 28, pp. 19–24 (MIT Press, 2018).

8. Iannis Xenakis, Formalized Music: Thought and Mathematics in Composition (Bloomington: Indiana University Press, 1972).

9. Curtis Roads, The Computer Music Tutorial (London: MIT Press, 1996).

10. G Marino, MH Serra and JM Raczinski, “The UPIC System: Origins and Innovations”, Perspectives of New Music 31, No. 1, pp. 258–269 (1993).

11. Jean Bresson, “Reactive Visual Programs for Computer-Aided Music Composition”, Visual Languages and Human-Centric Computing Symposium Proceedings, pp. 141–144 (2014).

12. A Hazzard, S Benford and G Burnett, “You’ll Never Walk Alone: Designing a Location-Based Soundtrack”, NIME – New Interfaces for Musical Expression Conference Proceedings, pp. 411–414 (2014).

13. HY Lin, YT Lin, MC Tien and JL Wu, “Music Paste: Concatenating Music Clips Based on Chroma and Rhythm Features”, ISMIR – International Society for Music Information Retrieval Conference Proceedings, pp. 213–218 (2009).

14. R Manzelli, V Thakkar, A Siahkamari and B Kulis, “An End to End Model for Automatic Music Generation: Combining Deep Raw and Symbolic Audio Networks”, ICCC – Computational Creativity Conference Proceedings (2018).

15. Pedro Louzeiro, “The Comprovisador’s Real-Time Notation Interface”, CMMR – Computer Music Multidisciplinary Research Symposium Proceedings, pp. 489–508 (2017).

16. Kevin C Baird, “Real-Time Generation of Music Notation via Audience Interaction Using Python and GNU Lilypond”, NIME – New Interfaces for Musical Expression Conference Proceedings, pp. 240–241 (2005).

17. John Eacott, “Flood Tide See Further: Sonification as Musical Performance”, ICMC – International Computer Music Conference Proceedings, pp. 69–74 (2011).

18. Andrea Agostini and Daniele Ghisi, “Real-Time Computer-Aided Composition with Bach”, Contemporary Music Review 32, No. 1, pp. 41-48 (2013).

19. Georg Hajdu and Nick Didkovsky, “Maxscore – Current State of the Art”, ICMC – International Computer Music Conference Proceedings, pp. 156–162 (2012).

20. D Fober, Y Orlarey and S Letz, “INScore: An Environment for the Design of Live Music Scores”, LAC – Linux Audio Conference Proceedings, pp. 47-54 (2012).

21. Jean Bresson, “Reactive Visual Programs for Computer-Aided Music Composition”, Visual Languages and Human-Centric Computing Symposium Proceedings, pp. 141–144 (2014).

22. AF Blackwell, E Cocker, G Cox, A McLean and T Magnusson, Live Coding: A User’s Manual (United Kingdom: MIT Press, 2022).

23. J Stewart, S Lawson, M Hodnick and B Gold, “Cibo v2: Realtime Livecoding A.I. Agent”, ICLC – International Conference on Live Coding Proceedings, pp. 20–31 (2020).

24. Simon Hickinbotham and Susan Stepney, “Augmenting Live Coding with Evolved Patterns”, in Evolutionary and Biologically Inspired Music, Sound, Art and Design (Springer, Cham, 2016) pp. 31-46.

25. Alex McLean, “Making Programming Languages to Dance to: Live Coding with Tidal”, FARM – Functional Art, Music, Modeling & Design Workshop Proceedings, pp. 63–70 (New York, 2014).

26. D Ogborn, E Tsabary, I Jarvis, A Cárdenas and A McLean, “Extramuros: Making Music in a Browser-Based, Language-Neutral Collaborative Live Coding Environment”, ICLC – International Conference on Live Coding Proceedings, pp. 163–169 (2015).

27. P Agrawal, S Banga, N Pathak, S Goel and S Kaushik, “Automated Music Generation using LSTM”, INDIAcom – Computing for Sustainable Global Development Conference Proceedings, pp. 1395-1399 (2018).

28. Michael Cuthbert and Christopher Ariza, “music21: A Toolkit for Computer-Aided Musicology and Symbolic Music Data”, ISMIR – International Society for Music Information Retrieval Conference Proceedings, pp. 637-642 (2010).

29. S Chen, Y Zhong and R Du, “Automatic composition of Guzheng (Chinese Zither) Music Using Long Short-Term Memory Network (LSTM) and Reinforcement Learning (RL)”, Scientific Reports 12, No. 15829 (Nature Publishing Group, 2022).

30. M Mots’oehli, AS Bosman and JP De Villiers, “Comparision Of Adversarial And Non-Adversarial LSTM Music Generative Models”, in Intelligent Computing (Springer, Cham, 2023) pp. 428–458.

31. Y Yu, A Srivastava and S Canales, “Conditional LSTM-GAN for Melody Generation from Lyrics”, Transactions on Multimedia Computing, Communications and Applications 17, No. 1, pp. 1-20 (IEEE, 2021).

32. Adrien Ycart and Emmanouil Benetos, “Learning and Evaluation Methodologies for Polyphonic Music Sequence Prediction With LSTMs”, IEEE/ACM Transactions on Audio, Speech, and Language Processing 28, pp. 1328–1341 (2020).

33. G Li, S Ding, Y Li, and K Zhang, “Music Generation and Human Voice Conversion Based on LSTM”, MATEC Web of Conferences 336 (2021).

34. Y Huang, X Huang and Q Cai, “Music Generation Based on Convolution-LSTM”, Computer and Information Science 11, No. 3, pp. 50-56 (2018).

35. J Min, Z Liu, L Wang, D Li, M Zhang and Y Huang, “Music Generation System for Adversarial Training Based on Deep Learning”, Processes 10, No. 12 (2022).

36. P Arya, P Kukreti and N Jha, “Music Generation Using LSTM and Its Comparison with Traditional Method”, Advances in Transdisciplinary Engineering 27, pp. 545-550 (2022).

37. Victor Lazzarini, Steven Yi, John ffitch, Joachim Heintz, Øyvind Brandtsegg and Iain McCurdy, Csound: A Sound and Music Computing System (Springer, 2016).

38. Alexandros Drymonitis & Nicoleta Chatzopoulou, “Data Mining / Live Scoring – A Live Performance of a Computer-Aided Composition Based on Twitter”, AIMC – AI Music Creativity Conference Proceedings (2021).
